Challenge results are published. Top 4 teams are invited to submit the GC papers to the APSIPAASC 2025. More information please refer to "Paper Submission Guidelines".

Deadlines extended: Final submissions now due August 8 (was August 1) and results announced August 10 (was August 8).

Participants are required to submit the codes and model checkpoints for reproducing the results, more information please refer to "Results Submission".

There is no team registration requirement for this year's challenge.

2025-July-22 The metadata of the evaluation dataset is available at https://github.com/JishengBai/APSIPA2025GC-ASC/tree/main/metadata .

2025-June-15 The challenge has started and the metadata of the development dataset is available at https://github.com/JishengBai/APSIPA2025GC-ASC/tree/main/metadata.

| Rank | Team Name | Score(Macro-accuracy) | Technical Report | Bus | Airport | Metro | Restaurant | Shopping mall | Public square | Urban park | Traffic street | Construction site | Bar |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | DeepTone | 0.644 | Report | 0.670 | 0.753 | 0.910 | 0.650 | 0.500 | 0.170 | 0.520 | 0.780 | 0.580 | 0.910 |

| 2 | Kawamura_TMU | 0.628 | Report | 0.430 | 0.800 | 0.860 | 0.600 | 0.440 | 0.680 | 0.613 | 0.660 | 0.440 | 0.760 |

| 3 | ALPS | 0.613 | Report | 0.440 | 0.693 | 0.920 | 0.750 | 0.580 | 0.040 | 0.700 | 0.650 | 0.510 | 0.850 |

| 4 | Masayuki-sera-TMU | 0.586 | Report | 0.420 | 0.667 | 0.830 | 0.690 | 0.400 | 0.240 | 0.627 | 0.540 | 0.760 | 0.690 |

| * | *Baseline* | 0.582 | Report | 0.580 | 0.513 | 0.770 | 0.580 | 0.480 | 0.260 | 0.640 | 0.610 | 0.500 | 0.890 |

| 5 | HAI-LAB | 0.539 | Report | 0.060 | 0.860 | 0.910 | 0.520 | 0.490 | 0.370 | 0.440 | 0.600 | 0.500 | 0.640 |

| 6 | ditlab | 0.522 | Report | 0.530 | 0.640 | 0.830 | 0.520 | 0.430 | 0.190 | 0.700 | 0.730 | 0.560 | 0.090 |

| 7 | gisp | 0.500 | Report | 0.140 | 0.460 | 0.670 | 0.640 | 0.520 | 0.480 | 0.413 | 0.650 | 0.500 | 0.530 |

| 8 | Li_NTU | 0.493 | Report | 0.450 | 0.533 | 0.600 | 0.690 | 0.530 | 0.030 | 0.533 | 0.640 | 0.490 | 0.430 |

| 9 | Audio_IIT Mandi | 0.341 | Report | 0.020 | 0.113 | 0.620 | 0.170 | 0.050 | 0.400 | 0.780 | 0.500 | 0.460 | 0.300 |

The top 4 teams are invited to submit the paper to the APSIPA ASC 2025. GC Papers should be submitted via the APSIPA ASC 2025 CMT system, APSIPAASC 2025 Grand Challenge track by August 18 (AOE time). More information can be found at APSIPA Submission Guidelines.

| Date(AoE Time) | Event |

|---|---|

| June 15, 2025 | Challenge launch Baseline system & Development metadata release |

| July 22, 2025 | Evaluation metadata release |

| Final result & Code submission | |

| Results announcement | |

| Special session submission deadline of GC paper | |

| Acceptance notification of GC paper | |

| August 31, 2025 | Submission Deadline of Camera Ready Paper |

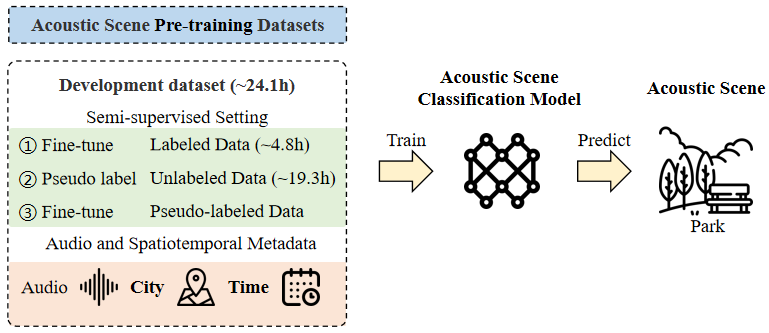

The APSIPA ASC 2025 GC (City and Time-Aware Semi-supervised Acoustic Scene Classification) extends the work of the ICME 2024 GC (Semi-supervised Acoustic Scene Classification under Domain Shift), which addressed the challenge of generalizing across different cities. This year's challenge explicitly incorporates city-level location and timestamp metadata for each audio sample, encouraging participants to design models that leverage both geographic and temporal context. It maintains the semi-supervised learning setting, reflecting real-world scenarios where large amounts of unlabeled data coexist with limited labeled examples. Participants are invited to develop innovative methods that combine audio content with contextual information to enhance classification performance and robustness.

For the APSIPA ASC 2025 grand challenge "City and Time-Aware Semi-supervised Acoustic Scene Classification", we provide a development dataset comprising approximately 24 hours of audio recordings from the Chinese Acoustic Scene (CAS) 2023 dataset. This challenge introduces previously unutilized contextual metadata that accompanies each recording:

City information: Identification of the recording location among 22 diverse Chinese cities (e.g., Xi'an, Beijing, Shanghai)

Timestamp information: Precise recording time accurate to year, month, day, hour, minute, and second

The CAS 2023 dataset is a large-scale dataset that serves as a foundation for research related to environmental acoustic scenes. The dataset includes 10 common acoustic scenes, with a total duration of over 130 hours. Each audio clip is 10 seconds long with metadata about the recording location and timestamp. The data collection spanned from April 2023 to September 2023, covering 22 different cities across China.

Acoustic scenes (10): Bus, Airport, Metro, Restaurant, Shopping mall, Public square, Urban park, Traffic street, Construction site, Bar

More details can be found at https://arxiv.org/abs/2402.02694.

The audio recordings of development dataset can be found at https://zenodo.org/records/10616533.

The audio recordings of evaluation dataset can be found at https://zenodo.org/records/10820626.

Metadata of development and evaluation datasets can be found at https://github.com/JishengBai/APSIPA2025GC-ASC/tree/main/metadata.

The baseline system for the APSIPA ASC 2025 GC "City and Time-Aware Semi-supervised Acoustic Scene Classification" challenge is based on a multimodal semi-supervised framework with a pre-trained SE-Trans model. Baseline codes are released at https://github.com/JishengBai/APSIPA2025GC-ASC. Systems will be ranked by macro-average accuracy (average of the class-wise accuracies). If two teams got the same score on the evaluation dataset, the team with the smaller model size will be ranked higher.

Participants must adhere to the following terms and conditions. Failure to comply will result in disqualification from the Challenge.

The model should not be trained on any data from the evaluation dataset.

Participants are required to submit the results by the challenge submission deadline.

To ensure the results can be reproduced by the organizers, the submission should include:

1) A .csv file including the classification results for each audio in the evaluation set with the following template:

| filename | scene_label |

|---|---|

| 49f03553cf0a4744ac2af9fa55703bb321_2 | Bus |

2) Inference codes and trained models for evaluation by the organizers.

3) Comprehensive documentation, instructions, or other relevant information to facilitate the execution of the codes by the organizers.

4) A technical report (*.pdf) explaining the method in sufficient detail (2-6 pages including references). This report will be publicly available on the challenge website. The APSIPA ASC paper template is recommend.

All files should be packaged into a zip file for submission. You can submit your final results through the Google Form. Each team is limited to submitting only ONE system. If multiple submissions are made, only the last submission before the deadline will be considered. Please carefully provide the correct information: Team Name, Institute, Team Leader and Member(Last name, First name) and E-mail.